Blogs

Most engineering teams progress through AI development stages, from basic prompt usage to trustworthy AI workflows, and layer on autonomy only after proving their workflows and AI guardrails at each step. This staged approach to AI adoption fits how developers already work inside IDEs and avoids risky jumps to fully autonomous AI agents.

More Stories

Data-Flow Transparency in AI Coding Assistants: Cloud vs On-Device

Data-flow transparency is critical for secure and responsible AI development. Understanding how AI coding assistants handle your code, prompts, and metadata helps you avoid accidental exposure of sensitive information and helps ensure compliance with enterprise privacy standards.

Model Context Protocol: Universal AI Integration Standard

MCP: The Universal Port for AI Tools - and Why On-Device Servers Win on Privacy

Beyond Lines of Code: Measuring AI Impact with Business-Aligned KPIs

AI impact measurement demands objective, business-aligned AI KPIs rather than superficial productivity statistics. While minutes saved or lines of code generated make compelling headlines, they rarely correlate with genuine business value—and can actively drive counterproductive behaviors like code bloat or excessive AI dependency without quality validation.

From Chat to Change: How Agentic AI in the IDE Becomes the Safest Path to Enterprise Impact

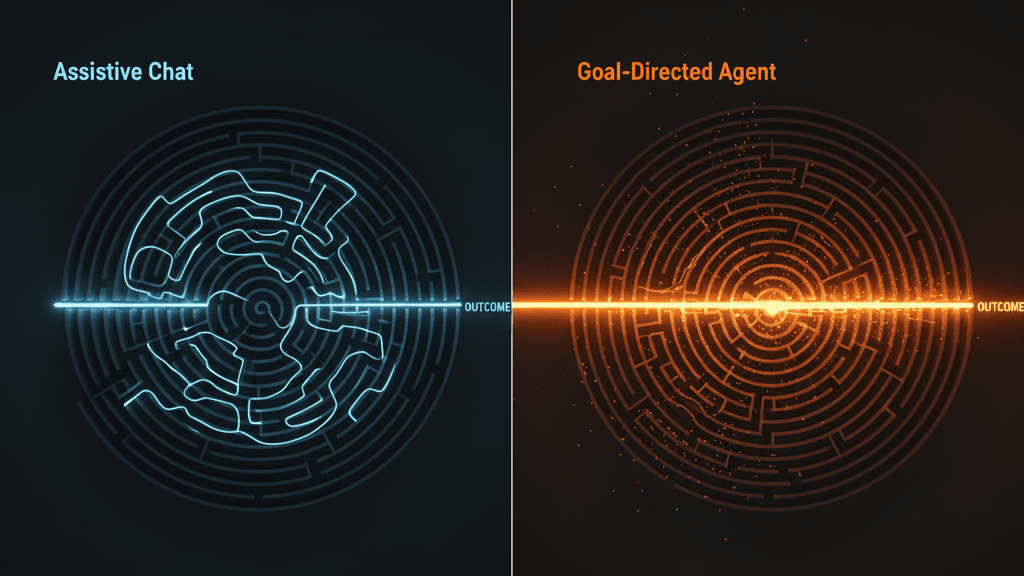

The trajectory of agentic AI adoption signals a fundamental shift in how enterprises approach artificial intelligence. Analysts forecast that by 2028, autonomous AI systems will handle a meaningful share of work decisions and power core business applications, moving beyond conversational assistants to goal-directed AI execution that delivers measurable outcomes.

Breaking the GenAI Paradox: How Autonomous AI Agents Drive Measurable Enterprise Impact

Agentic AI in enterprises represents a fundamental shift from assistive chat interfaces to goal-directed AI systems that plan, execute, and coordinate across business workflows. Industry analysts forecast that by 2028, a significant share of enterprise applications will incorporate autonomous AI agents, marking the maturation from experimental copilots to outcome-oriented AI agents embedded in core decision flows.

Don't Pick a Side: Orchestrate Generalists for Breadth and Specialists for Outcomes

The debate between AI generalist and AI specialist models misses a fundamental truth: enterprise teams don't need to choose; they need orchestration. Generalist AI models provide versatility for exploration and cross-domain tasks, while specialist AI models deliver the precision and compliance required for high-stakes workflows. The winning enterprise AI strategy leverages both.

From Opinions to Outcomes: Using RLHF Signals to Raise Code Quality - Without Exporting Your Repo

Reinforcement learning from human feedback (RLHF) has transformed how AI models learn from human preferences, turning broadly capable language models into helpful, steerable assistants. While OpenAI's InstructGPT demonstrated RLHF's power at scale, enterprise teams need privacy-preserving model training that keeps proprietary code and feedback signals secure.

ICL First, Fine-Tune When It Sticks: A Pragmatic Path to Reliable AI Coding in the Enterprise

Choosing between fine-tuning and in-context learning is one of the most critical decisions enterprise teams face when customizing AI coding assistants. Both LLM adaptation methods offer distinct advantages, and knowing when to use each determines whether your AI investment delivers rapid iteration or production stability.

Reasoning LLMs vs Auto-complete: Why Deliberate Models Win for Complex Code

The divide between reasoning LLMs and traditional autocomplete models is reshaping how developers approach complex coding tasks. While predictive models excel at quick completions, deliberate chain-of-thought LLMs, such as OpenAI's o1 reasoning model, fundamentally rethink how AI assists with debugging, refactoring, and architectural decisions.